Installing a highly available Kubernetes (k8s v1.35.0) cluster on Ubuntu Server 24.04

For a better reading experience, visit Kelsen's personal homepage: http://www.huerpu.cc:7000/

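1. Overview

In the spirit of learning new technology and running current releases, this cluster uses the recently released k8s v1.35.0 on Ubuntu Server 24.04, the newest LTS release at the time of writing.

The cluster has 3 master (control-plane) nodes and 4 worker nodes, each with 2 vCPUs and 4 GB of RAM. kubeadm refuses to initialize a control-plane node with fewer than 2 CPUs, so give the nodes as many cores and as much memory as your hardware allows. The hostnames and IP addresses are: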

| Hostname | IP | Role | OS |
|---|---|---|---|
| lb.k8s.hep.cc | 192.168.31.200 | Virtual IP (VIP) | - |
| hep-k8s-master01 | 192.168.31.201 | Control plane | Ubuntu Server 24.04 |
| hep-k8s-master02 | 192.168.31.202 | Control plane | Ubuntu Server 24.04 |
| hep-k8s-master03 | 192.168.31.203 | Control plane | Ubuntu Server 24.04 |
| hep-k8s-worker01 | 192.168.31.204 | Worker node | Ubuntu Server 24.04 |
| hep-k8s-worker02 | 192.168.31.205 | Worker node | Ubuntu Server 24.04 |
| hep-k8s-worker03 | 192.168.31.206 | Worker node | Ubuntu Server 24.04 |
| hep-k8s-worker04 | 192.168.31.207 | Worker node | Ubuntu Server 24.04 |

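hep-k8s-master01, hep-k8s-master02, and hep-k8s-master03 are the three master nodes; hep-k8s-worker01 through hep-k8s-worker04 are the four worker nodes. The virtual IP 192.168.31.200 is provided by kube-vip, so the API server endpoint stays reachable when a single master goes down. The layout is built with security and reliability in mind and is suitable for small and medium-sized deployments, and it can grow beyond that by adding nodes.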
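The node plan above can also be kept as data and turned into `/etc/hosts` lines, so the same list does not have to be retyped on every machine. This is only a minimal sketch: the function names `nodes` and `hosts_entries` are made up for illustration, and the script prints the lines instead of writing to `/etc/hosts`.

```shell
#!/bin/sh
# Node inventory taken from the table in this article: "name ip" per line.
# The function names are illustrative and not part of any tool.
nodes() {
  cat <<'EOF'
lb.k8s.hep.cc 192.168.31.200
hep-k8s-master01 192.168.31.201
hep-k8s-master02 192.168.31.202
hep-k8s-master03 192.168.31.203
hep-k8s-worker01 192.168.31.204
hep-k8s-worker02 192.168.31.205
hep-k8s-worker03 192.168.31.206
hep-k8s-worker04 192.168.31.207
EOF
}

# Print one /etc/hosts style line ("ip name") per inventory entry.
hosts_entries() {
  nodes | while read -r name ip; do
    printf '%s %s\n' "$ip" "$name"
  done
}

# Preview only; nothing is written to /etc/hosts.
hosts_entries
```

Once the preview looks right, the output could be appended as root with `hosts_entries >> /etc/hosts`.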

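All of these machines are VMs on PVE, hosted on an Intel(R) Xeon(R) CPU D-1581 @ 1.80GHz (1 socket, 16 cores, 32 threads). More worker nodes, such as an idle mini PC, laptop, workstation, or server, can join the cluster later, and applications can then be deployed across all of them.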

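2. Prerequisites

All of my cluster nodes run on PVE, so for convenience I do the repetitive work once on a template machine, hep-k8s-master-worker-temp, and then clone the VM, which saves a lot of time. On standalone machines, run the same commands on each one so that every node ends up with the same configuration.

2.1 Base environment configuration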

# Switch to root
sudo su -

# Update packages
apt update
apt upgrade -y

# Set the hostname
hostnamectl set-hostname hep-k8s-master01

# Add hosts entries
cat >> /etc/hosts << EOF
192.168.31.200 lb.k8s.hep.cc
192.168.31.201 hep-k8s-master01
192.168.31.202 hep-k8s-master02
192.168.31.203 hep-k8s-master03
192.168.31.204 hep-k8s-worker01
192.168.31.205 hep-k8s-worker02
192.168.31.206 hep-k8s-worker03
192.168.31.207 hep-k8s-worker04
EOF

# Set the time zone
timedatectl set-timezone Asia/Shanghai

# Check the current time
date

# Install ntpdate and sync the clock once
apt install ntpdate -y
ntpdate ntp.aliyun.com

# Create the kernel module load file
cat << EOF | tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF

# Load the modules now
modprobe overlay
modprobe br_netfilter

# Check that the modules are loaded
lsmod | grep -E "overlay"
lsmod | grep -E "br_netfilter"

# Add the bridge filtering and IP forwarding settings
cat << EOF | tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

# Apply the settings
sysctl --system

# Install ipset and ipvsadm
apt install ipset ipvsadm -y

# Load the IPVS modules at boot
cat << EOF | tee /etc/modules-load.d/ipvs.conf
ip_vs
ip_vs_rr
ip_vs_wrr
ip_vs_sh
nf_conntrack
EOF

# Create a script that loads the modules right away
cat << EOF | tee ipvs.sh
#!/bin/sh
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack
EOF

# Run the script to load the modules
sh ipvs.sh

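# Disable swap
# Turn it off for the current boot
swapoff -a

# Turn it off permanently by commenting out the swap line in /etc/fstab
sed -i '/swap/s/^/#/' /etc/fstab

# Verify: the swap entry in /etc/fstab should now start with #,
# and swapon --show should print nothing
cat /etc/fstab | grep swap
swapon --show

2.2 Configure Docker and cri-dockerd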

# Set up Docker
# Add Docker's official GPG key
apt update
apt install ca-certificates curl -y
install -m 0755 -d /etc/apt/keyrings
curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc
chmod a+r /etc/apt/keyrings/docker.asc

# Add the repository to the apt sources
echo \
"deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] \
https://download.docker.com/linux/ubuntu \
$(. /etc/os-release && echo "$VERSION_CODENAME") stable" | \
sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

# Refresh the package index
apt update

# Install the Docker packages
apt install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin -y

# Check the version
docker --version

# Set up cri-dockerd
# Clone the cri-dockerd repository (it provides the systemd unit files)
git clone https://github.com/Mirantis/cri-dockerd.git

# Download the release tarball, which contains a prebuilt binary
wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.21/cri-dockerd-0.3.21.amd64.tgz

# Extract it; the cri-dockerd binary is unpacked into the cri-dockerd/ directory
tar xf cri-dockerd-0.3.21.amd64.tgz

# Enter the directory and list its contents
# cri-dockerd/ now holds the cri-dockerd binary
# cri-dockerd/packaging/systemd/ holds cri-docker.service and cri-docker.socket, which are used below
cd cri-dockerd/
ls

# From inside cri-dockerd/, install the cri-dockerd binary
install -o root -g root -m 0755 cri-dockerd /usr/local/bin/cri-dockerd

# Copy cri-docker.service and cri-docker.socket from packaging/systemd/ to /etc/systemd/system
cp packaging/systemd/* /etc/systemd/system

# Point the service file at the installed binary
sed -i -e 's,/usr/bin/cri-dockerd,/usr/local/bin/cri-dockerd,' /etc/systemd/system/cri-docker.service

# Set the Pod infrastructure (pause) container image:
# edit the ExecStart line (line 10) and add --pod-infra-container-image=registry.k8s.io/pause:3.10.1
vim /etc/systemd/system/cri-docker.service
# The ExecStart line should then read:
ExecStart=/usr/local/bin/cri-dockerd --pod-infra-container-image=registry.k8s.io/pause:3.10.1 --container-runtime-endpoint fd://

# Reload systemd so it picks up the new unit files, then enable the socket at boot and start it now
systemctl daemon-reload
systemctl enable --now cri-docker.socket

# Check cri-dockerd; "active (running)" means success
systemctl status cri-docker

2.3 Configure the GPG key and apt repository

# Fetch the official Kubernetes GPG signing key
curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.35/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

# Add the apt repository for Kubernetes 1.35
echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.35/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

# Refresh the apt package index
apt update

# Show the package policy for kubeadm (install state and version sources)
apt-cache policy kubeadm

# Show detailed information and dependencies for kubeadm
# apt-cache showpkg kubeadm

# List the available kubeadm versions
# apt-cache madison kubeadm

3. Prepare the master and worker nodes

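Clone the hep-k8s-master-worker-temp VM (right-click it and choose Clone), then change the hostname and IP address of each clone. Create three masters and four workers this way; every clone already carries the configuration from section 2. On standalone Linux machines, run all of the section 2 steps on each machine instead.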
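Since only the address differs from clone to clone, the netplan file can be generated from a single template. The following is a rough sketch under a few assumptions: the function name `render_netplan` is invented for illustration, the interface, gateway, and DNS values are the ones used in this article, and a `routes` entry with `to: default` is used for the gateway because newer netplan releases flag `gateway4` as deprecated. The script only prints the YAML.

```shell
#!/bin/sh
# Print a netplan document for one node.
# Interface name, gateway and DNS servers are copied from this article;
# adjust them for your own network. The function name is illustrative.
render_netplan() {
  ip="$1"
  cat <<EOF
network:
  version: 2
  ethernets:
    ens18:
      dhcp4: false
      addresses: [${ip}/24]
      routes:
        - to: default
          via: 192.168.31.2
      nameservers:
        addresses: [192.168.31.1, 8.8.8.8]
EOF
}

# Preview the file for hep-k8s-worker01 without touching /etc/netplan.
render_netplan 192.168.31.204
```

To use it, the output would be redirected into the netplan file on the node in question and applied with a reboot, as in the steps that follow.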

3.1 Set the hostname and a static IP address

# Node hep-k8s-master01
# Set the hostname
hostnamectl set-hostname hep-k8s-master01

# Set a static IP. Your file may not be named 50-cloud-init.yaml, but it lives in /etc/netplan/.
# hep-k8s-master01 is fixed at 192.168.31.201
vim /etc/netplan/50-cloud-init.yaml

network:
  ethernets:
    ens18:
      dhcp4: false
      addresses: [192.168.31.201/24]
      gateway4: 192.168.31.2
      nameservers:
        addresses: [192.168.31.1,8.8.8.8]
  version: 2

# Reboot to apply
reboot

# Node hep-k8s-master02
# Set the hostname
hostnamectl set-hostname hep-k8s-master02

# Set a static IP. Your file may not be named 50-cloud-init.yaml, but it lives in /etc/netplan/.
# hep-k8s-master02 is fixed at 192.168.31.202
vim /etc/netplan/50-cloud-init.yaml

network:
  ethernets:
    ens18:
      dhcp4: false
      addresses: [192.168.31.202/24]
      gateway4: 192.168.31.2
      nameservers:
        addresses: [192.168.31.1,8.8.8.8]
  version: 2

# Reboot to apply
reboot

# Node hep-k8s-master03
# Set the hostname
hostnamectl set-hostname hep-k8s-master03

# Set a static IP. Your file may not be named 50-cloud-init.yaml, but it lives in /etc/netplan/.
# hep-k8s-master03 is fixed at 192.168.31.203
vim /etc/netplan/50-cloud-init.yaml

network:
  ethernets:
    ens18:
      dhcp4: false
      addresses: [192.168.31.203/24]
      gateway4: 192.168.31.2
      nameservers:
        addresses: [192.168.31.1,8.8.8.8]
  version: 2

# Reboot to apply
reboot

# Node hep-k8s-worker01
# Set the hostname
hostnamectl set-hostname hep-k8s-worker01

# Set a static IP. Your file may not be named 50-cloud-init.yaml, but it lives in /etc/netplan/.
# hep-k8s-worker01 is fixed at 192.168.31.204
vim /etc/netplan/50-cloud-init.yaml

network:
  ethernets:
    ens18:
      dhcp4: false
      addresses: [192.168.31.204/24]
      gateway4: 192.168.31.2
      nameservers:
        addresses: [192.168.31.1,8.8.8.8]
  version: 2

# Reboot to apply
reboot

# Node hep-k8s-worker02
# Set the hostname
hostnamectl set-hostname hep-k8s-worker02

# Set a static IP. Your file may not be named 50-cloud-init.yaml, but it lives in /etc/netplan/.
# hep-k8s-worker02 is fixed at 192.168.31.205
vim /etc/netplan/50-cloud-init.yaml

network:
  ethernets:
    ens18:
      dhcp4: false
      addresses: [192.168.31.205/24]
      gateway4: 192.168.31.2
      nameservers:
        addresses: [192.168.31.1,8.8.8.8]
  version: 2

# Reboot to apply
reboot

# Node hep-k8s-worker03
# Set the hostname
hostnamectl set-hostname hep-k8s-worker03

# Set a static IP. Your file may not be named 50-cloud-init.yaml, but it lives in /etc/netplan/.
# hep-k8s-worker03 is fixed at 192.168.31.206
vim /etc/netplan/50-cloud-init.yaml

network:
  ethernets:
    ens18:
      dhcp4: false
      addresses: [192.168.31.206/24]
      gateway4: 192.168.31.2
      nameservers:
        addresses: [192.168.31.1,8.8.8.8]
  version: 2

# Reboot to apply
reboot

# Node hep-k8s-worker04
# Set the hostname
hostnamectl set-hostname hep-k8s-worker04

# Set a static IP. Your file may not be named 50-cloud-init.yaml, but it lives in /etc/netplan/.
# hep-k8s-worker04 is fixed at 192.168.31.207
vim /etc/netplan/50-cloud-init.yaml

network:
  ethernets:
    ens18:
      dhcp4: false
      addresses: [192.168.31.207/24]
      gateway4: 192.168.31.2
      nameservers:
        addresses: [192.168.31.1,8.8.8.8]
  version: 2

# Reboot to apply
reboot

3.2 Open the required ports

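For the sake of cluster security I did not turn the firewall off entirely. Instead, I open only the ports that are actually needed, which is closer to how production environments are run and keeps the exposed surface small.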
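One way to keep the per-role port lists reviewable is to generate the `ufw` commands and read them before running anything. The sketch below is a dry run: the function name `ufw_rules` is made up for illustration, and it covers only the core Kubernetes ports (API server 6443, etcd 2379-2380, kubelet 10250, kube-controller-manager 10257, kube-scheduler 10259, and the NodePort range), so CNI-specific ports would still need to be added for your network plugin.

```shell
#!/bin/sh
# Print (do not run) the ufw commands for a node role: "master" or "worker".
# The function name is illustrative; only core Kubernetes ports are listed.
ufw_rules() {
  role="$1"
  # SSH goes first so that enabling the firewall cannot cut off the session.
  echo "ufw allow ssh/tcp"
  # kubelet API and the NodePort range are needed on every node.
  echo "ufw allow 10250/tcp"
  echo "ufw allow 30000:32767/tcp"
  if [ "$role" = "master" ]; then
    # API server, etcd, kube-controller-manager, kube-scheduler.
    echo "ufw allow 6443/tcp"
    echo "ufw allow 2379:2380/tcp"
    echo "ufw allow 10257/tcp"
    echo "ufw allow 10259/tcp"
  fi
  echo "ufw enable"
}

# Preview the rules for a worker node.
ufw_rules worker
```

After reviewing the list, it could be executed as root with `ufw_rules master | sh` on a master or `ufw_rules worker | sh` on a worker.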

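3.2.1 Master nodes

# Run on all three master nodes; they open more ports than the workers
# Allow SSH first so that enabling the firewall does not cut off the current session
ufw allow ssh/tcp

# Enable the UFW firewall
ufw enable

# Ports required on k8s master nodes
# 6443 API server, 2379-2380 etcd, 10250 kubelet, 10257 kube-controller-manager, 10259 kube-scheduler
ufw allow 6443/tcp
ufw allow 2379:2380/tcp
ufw allow 10250/tcp
# 10251 and 10252 are the old insecure scheduler and controller-manager ports; current releases no longer listen on them
ufw allow 10251/tcp
ufw allow 10252/tcp
ufw allow 10257/tcp
ufw allow 10259/tcp
# 8472/udp is the Linux default VXLAN port (used by Flannel)
ufw allow 8472/udp
# NodePort range
ufw allow 30000:32767/tcp

# Overlay network ports: 4789/udp (VXLAN, as used by Calico) and 51820-51821/udp (WireGuard)
# kube-vip in ARP mode works at layer 2 and needs no extra port here
ufw allow 4789/udp
ufw allow 51820/udp
ufw allow 51821/udp

# Check the firewall rules
ufw status numbered

3.2.2 Worker nodes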

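# Run on all four worker nodes
# Allow SSH first so that enabling the firewall does not cut off the current session
ufw allow ssh/tcp

# Enable the UFW firewall
ufw enable

# Ports required on k8s worker nodes
ufw allow 10250/tcp
ufw allow 8472/udp
ufw allow 30000:32767/tcp

# Check the firewall rules
ufw status numbered

4. Kubernetes preparation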

4.1 Install the Kubernetes packages and pull the container images

# Refresh the package index
apt update

# Show the package policy for kubeadm (install state and version sources)
apt-cache policy kubeadm

# Install the default version of the core components
# apt install -y kubelet kubeadm kubectl

# Install a pinned version of the core components
# Masters get kubelet, kubeadm, and kubectl; workers only need kubelet and kubeadm:
# apt install -y kubelet=1.35.0-1.1 kubeadm=1.35.0-1.1
apt install -y kubelet=1.35.0-1.1 kubeadm=1.35.0-1.1 kubectl=1.35.0-1.1

# Hold the packages so they are not upgraded automatically
# On workers, hold only kubelet and kubeadm:
# apt-mark hold kubelet kubeadm
apt-mark hold kubelet kubeadm kubectl

# To release the hold later (for a planned upgrade):
# sudo apt-mark unhold kubelet kubeadm kubectl

# Set the kubelet cgroup driver
vim /etc/default/kubelet
# File contents:
KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

# Or write the same setting with one command
# echo 'KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"' | sudo tee /etc/default/kubelet

# Enable kubelet at boot
systemctl enable kubelet

# List the images required by K8s 1.35.0
kubeadm config images list
kubeadm config images list --kubernetes-version=v1.35.0

# Pull the K8s 1.35.0 images (through the cri-dockerd runtime socket)
# If registry.k8s.io is not reachable from your network, this pull will probably fail until a proxy is configured; section 7 covers that setup.
kubeadm config images pull --cri-socket unix:///var/run/cri-dockerd.sock

4.2 Prepare kube-vip, the cloud-native load balancer

# Run on master01
# Environment variables used by kube-vip
export VIP=192.168.31.200
export INTERFACE=ens18
export KVVERSION=v1.0.3

# Generate the kube-vip static Pod manifest and write it to the K8s static Pod directory
# (run without -it: an allocated TTY would add carriage returns to the piped YAML)
docker run --rm --net=host ghcr.io/kube-vip/kube-vip:$KVVERSION manifest pod \
--interface $INTERFACE \
--address $VIP \
--controlplane \
--services \
--arp \
--enableLoadBalancer \
--leaderElection | tee /etc/kubernetes/manifests/kube-vip.yaml

# Back up the generated kube-vip.yaml from /etc/kubernetes/manifests/
root@hep-k8s-master01:~# cd /etc/kubernetes/manifests/
root@hep-k8s-master01:/etc/kubernetes/manifests# ls
kube-vip.yaml
cp kube-vip.yaml /home/kelsen/kube-vip.yaml

# Copy kube-vip.yaml to the same directory on hep-k8s-master02
scp /etc/kubernetes/manifests/kube-vip.yaml hep-k8s-master02:/etc/kubernetes/manifests/

# Copy kube-vip.yaml to the same directory on hep-k8s-master03
scp /etc/kubernetes/manifests/kube-vip.yaml hep-k8s-master03:/etc/kubernetes/manifests/

5. Initialize the K8S cluster

5.1 Configure kubeadm-config.yaml

Editing kubeadm-config.yaml is the key step; once this file is right, the job is half done.
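The subnets in this file are easy to mistype, and a range whose address has host bits set, or one that overlaps the node LAN, tends to show up only later as a routing problem. The following is a minimal sketch of two checks in plain shell arithmetic; the helper names `ip_to_int`, `cidr_is_aligned`, and `cidr_overlap` are made up for illustration, and the addresses in the usage lines are the ones that appear in this article (service subnet 10.96.0.0/12, Calico's default pod range 192.168.0.0/16, node LAN 192.168.31.0/24).

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs="$IFS"
  IFS=.
  set -- $1          # split the address into its four octets
  IFS="$old_ifs"
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed when the address part of a CIDR has no host bits set.
cidr_is_aligned() {
  bits="${1#*/}"
  start="$(ip_to_int "${1%/*}")"
  size=$(( 1 << (32 - bits) ))
  [ $(( start % size )) -eq 0 ]
}

# Succeed when two CIDR ranges share at least one address.
cidr_overlap() {
  a_bits="${1#*/}"
  b_bits="${2#*/}"
  a_size=$(( 1 << (32 - a_bits) ))
  b_size=$(( 1 << (32 - b_bits) ))
  a_start="$(ip_to_int "${1%/*}")"
  b_start="$(ip_to_int "${2%/*}")"
  # Round each start down to its network boundary.
  a_start=$(( a_start - a_start % a_size ))
  b_start=$(( b_start - b_start % b_size ))
  [ "$a_start" -lt $(( b_start + b_size )) ] && [ "$b_start" -lt $(( a_start + a_size )) ]
}

# Check the subnets used in this article.
for cidr in 10.96.0.0/12 192.168.0.0/16; do
  if cidr_is_aligned "$cidr"; then
    echo "$cidr: aligned"
  else
    echo "$cidr: host bits set"
  fi
done
if cidr_overlap 192.168.0.0/16 192.168.31.0/24; then
  echo "pod subnet 192.168.0.0/16 overlaps node LAN 192.168.31.0/24"
fi
```

Whether an overlap between the pod range and the node LAN causes trouble depends on the CNI setup, so treat the last message as a prompt to double-check rather than as an error.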

# Generate a sample configuration file, kubeadm-config.yaml
kubeadm config print init-defaults --component-configs KubeProxyConfiguration > kubeadm-config.yaml

# Change the following settings in the file
# advertiseAddress: 192.168.31.201, the address of this host
# criSocket: unix:///var/run/cri-dockerd.sock, to use cri-dockerd
# name: hep-k8s-master01, the hostname of this host
# Add certSANs: the certificate SANs, listing the hostnames and IPs of all master nodes
#- lb.k8s.hep.cc
#- hep-k8s-master01
#- hep-k8s-master02
#- hep-k8s-master03
#- 192.168.31.201
#- 192.168.31.202
#- 192.168.31.203
# Add controlPlaneEndpoint: "lb.k8s.hep.cc:6443", the VIP name and port
# Add podSubnet: 192.168.0.0/16, matching Calico's default Pod subnet. I kept the Calico default here rather than choosing another range.
# Note that 192.168.0.0/16 contains the node LAN 192.168.31.0/24; if you pick a different range, use the same value in Calico's custom-resources.yaml.
# strictARP: true
# mode: "ipvs"
vim kubeadm-config.yaml

localAPIEndpoint:
  advertiseAddress: 192.168.31.201
nodeRegistration:
  criSocket: unix:///var/run/cri-dockerd.sock
  name: hep-k8s-master01
apiServer:
  certSANs:
  - lb.k8s.hep.cc
  - hep-k8s-master01
  - hep-k8s-master02
  - hep-k8s-master03
  - 192.168.31.201
  - 192.168.31.202
  - 192.168.31.203
controlPlaneEndpoint: "lb.k8s.hep.cc:6443"
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 192.168.0.0/16
...
ipvs:
  excludeCIDRs: null
  minSyncPeriod: 0s
  scheduler: ""
  strictARP: true
  syncPeriod: 0s
  tcpFinTimeout: 0s
  tcpTimeout: 0s
  udpTimeout: 0s
kind: KubeProxyConfiguration
logging:
  flushFrequency: 0
  options:
    json:
      infoBufferSize: "0"
    text:
      infoBufferSize: "0"
  verbosity: 0
metricsBindAddress: ""
mode: "ipvs"
nftables:
  masqueradeAll: false
  masqueradeBit: null
  minSyncPeriod: 0s
  syncPeriod: 0s
nodePortAddresses: null

5.2 Master node setup

# Before kubeadm init, point kube-vip.yaml at super-admin.conf
sed -i 's#path: /etc/kubernetes/admin.conf#path: /etc/kubernetes/super-admin.conf#' /etc/kubernetes/manifests/kube-vip.yaml

# After kubeadm init, switch kube-vip.yaml back to admin.conf
# sed -i 's#path: /etc/kubernetes/super-admin.conf#path: /etc/kubernetes/admin.conf#' /etc/kubernetes/manifests/kube-vip.yaml

# Initialize the K8s cluster
kubeadm init --config kubeadm-config.yaml --upload-certs -v=9

# Output

...

I1224 16:15:49.355797 1333 loader.go:405] Config loaded from file: /etc/kubernetes/admin.conf

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes running the following command on each as root:

kubeadm join lb.k8s.hep.cc:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:bc9f80c85cd754eeb87dabcefd42e2ecbd26dd6644ff59bb88008cb397f2c569 \

--control-plane --certificate-key 55ccb68ad350de6c3cee535b1277d13426fbc0b48e7d4c9d9abe22916e69d6fb

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join lb.k8s.hep.cc:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:bc9f80c85cd754eeb87dabcefd42e2ecbd26dd6644ff59bb88008cb397f2c569

root@hep-k8s-master01:~#

# Configure kubectl
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Join hep-k8s-master02 and hep-k8s-master03 as control-plane nodes; the --cri-socket unix:///var/run/cri-dockerd.sock flag is required
kubeadm join lb.k8s.hep.cc:6443 --token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:bc9f80c85cd754eeb87dabcefd42e2ecbd26dd6644ff59bb88008cb397f2c569 \
--control-plane --certificate-key 55ccb68ad350de6c3cee535b1277d13426fbc0b48e7d4c9d9abe22916e69d6fb --cri-socket unix:///var/run/cri-dockerd.sock

# Once hep-k8s-master02 and hep-k8s-master03 have joined, configure kubectl on them as well
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

5.3 Worker node setup

# Join hep-k8s-worker01, hep-k8s-worker02, hep-k8s-worker03, and hep-k8s-worker04 to the cluster; the --cri-socket unix:///var/run/cri-dockerd.sock flag is required
kubeadm join lb.k8s.hep.cc:6443 --token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:bc9f80c85cd754eeb87dabcefd42e2ecbd26dd6644ff59bb88008cb397f2c569 --cri-socket unix:///var/run/cri-dockerd.sock

5.4 Deploy Calico

# Apply the Calico operator manifest (this deploys the Calico controller)
kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.29.1/manifests/tigera-operator.yaml

# Check that tigera-operator is Running

root@hep-k8s-master01:~# kubectl get ns

NAME STATUS AGE

default Active 11m

kube-node-lease Active 11m

kube-public Active 11m

kube-system Active 11m

tigera-operator Active 12s

root@hep-k8s-master01:~# kubectl get pods -n tigera-operator

NAME READY STATUS RESTARTS AGE

tigera-operator-58986cfc84-mqfjj 1/1 Running 0 23s

root@hep-k8s-master01:~#

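# Download the Calico custom resources file
wget https://raw.githubusercontent.com/projectcalico/calico/v3.29.1/manifests/custom-resources.yaml

# Edit the custom resources so the Pod CIDR matches the one used by kubeadm init. I left it at the default, 192.168.0.0/16.
vim custom-resources.yaml
# (Set the cidr on line 13 to the Pod CIDR given to kubeadm init, that is podSubnet in kubeadm-config.yaml or --pod-network-cidr; the default is 192.168.0.0/16)

# Apply the Calico custom resources to finish the deployment; after about five minutes everything should be Running
kubectl create -f custom-resources.yaml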

root@hep-k8s-master01:~# kubectl get ns

NAME STATUS AGE

calico-apiserver Active 20s

calico-system Active 20s

default Active 15m

kube-node-lease Active 15m

kube-public Active 15m

kube-system Active 15m

tigera-operator Active 3m51s

root@hep-k8s-master01:~# kubectl get pods -n calico-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-564c6979db-gqm5p 0/1 Pending 0 33s

calico-node-2ms4h 0/1 Init:0/2 0 33s

calico-node-5vhq4 0/1 Init:1/2 0 33s

calico-node-ffbk8 0/1 Init:1/2 0 33s

calico-node-rw2sw 0/1 Init:ImagePullBackOff 0 33s

calico-node-v9sps 0/1 Init:1/2 0 33s

calico-node-x5brm 0/1 Init:1/2 0 33s

calico-node-zhzg5 0/1 Init:1/2 0 33s

calico-typha-79dbf4db54-f478z 0/1 ContainerCreating 0 27s

calico-typha-79dbf4db54-mnj7h 1/1 Running 0 27s

calico-typha-79dbf4db54-ntbjc 1/1 Running 0 34s

csi-node-driver-79tqj 0/2 ContainerCreating 0 33s

csi-node-driver-b65fb 0/2 ContainerCreating 0 33s

csi-node-driver-dbqpb 0/2 ContainerCreating 0 33s

csi-node-driver-fw5br 0/2 ContainerCreating 0 33s

csi-node-driver-m7fc4 0/2 ContainerCreating 0 33s

csi-node-driver-wclw2 0/2 ContainerCreating 0 33s

csi-node-driver-xbpk4 0/2 ContainerCreating 0 33s

root@hep-k8s-master01:~#

# At this point the worker nodes show <none> under ROLES, which looks untidy, so label them
root@hep-k8s-master01:~# kubectl get nodes
NAME STATUS ROLES AGE VERSION
hep-k8s-master01 Ready control-plane 22m v1.35.0
hep-k8s-master02 Ready control-plane 18m v1.35.0
hep-k8s-master03 Ready control-plane 17m v1.35.0
hep-k8s-worker01 Ready <none> 15m v1.35.0
hep-k8s-worker02 Ready <none> 14m v1.35.0
hep-k8s-worker03 Ready <none> 14m v1.35.0
hep-k8s-worker04 Ready <none> 14m v1.35.0
root@hep-k8s-master01:~#

# Set the ROLES of the worker nodes to worker
kubectl label node hep-k8s-worker01 node-role.kubernetes.io/worker=worker
kubectl label node hep-k8s-worker02 node-role.kubernetes.io/worker=worker
kubectl label node hep-k8s-worker03 node-role.kubernetes.io/worker=worker
kubectl label node hep-k8s-worker04 node-role.kubernetes.io/worker=worker

# The workers now carry the worker label under ROLES

root@hep-k8s-master01:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

hep-k8s-master01 Ready control-plane 23m v1.35.0

hep-k8s-master02 Ready control-plane 18m v1.35.0

hep-k8s-master03 Ready control-plane 18m v1.35.0

hep-k8s-worker01 Ready worker 15m v1.35.0

hep-k8s-worker02 Ready worker 15m v1.35.0

hep-k8s-worker03 Ready worker 15m v1.35.0

hep-k8s-worker04 Ready worker 15m v1.35.0

root@hep-k8s-master01:~#

6. Deploy Nginx to verify the cluster

6.1 Verify cluster networking

root@hep-k8s-master01:~# kubectl get service -n kube-system
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 24m

root@hep-k8s-master01:~# dig -t a www.baidu.com @10.96.0.10

; <<>> DiG 9.18.39-0ubuntu0.24.04.2-Ubuntu <<>> -t a www.baidu.com @10.96.0.10

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 16197

;; flags: qr rd ra; QUERY: 1, ANSWER: 3, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 1232

; COOKIE: c5c39a4461293870 (echoed)

;; QUESTION SECTION:

;www.baidu.com. IN A

;; ANSWER SECTION:

www.baidu.com. 30 IN CNAME www.a.shifen.com.

www.a.shifen.com. 30 IN A 153.3.238.28

www.a.shifen.com. 30 IN A 153.3.238.127

;; Query time: 204 msec

;; SERVER: 10.96.0.10#53(10.96.0.10) (UDP)

;; WHEN: Wed Dec 24 16:40:39 CST 2025

;; MSG SIZE rcvd: 161

root@hep-k8s-master01:~#

6.2 Deploy Nginx on the cluster

# Create nginx.yaml with the following content
vim nginx.yaml

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginxweb
spec:
  selector:
    matchLabels:
      app: nginxweb1
  replicas: 2
  template:
    metadata:
      labels:
        app: nginxweb1
    spec:
      containers:
      - name: nginxwebc
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginxweb-service
spec:
  externalTrafficPolicy: Cluster
  selector:
    app: nginxweb1
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 30080
  type: NodePort

root@hep-k8s-master01:~# kubectl apply -f nginx.yaml

deployment.apps/nginxweb created

service/nginxweb-service created

root@hep-k8s-master01:~# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginxweb-6799787475-7xnwz 0/1 ContainerCreating 0 8s

nginxweb-6799787475-wvgdf 0/1 ContainerCreating 0 8s

root@hep-k8s-master01:~# kubectl get service
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 27m
nginxweb-service NodePort 10.97.67.72 <none> 80:30080/TCP 16s

root@hep-k8s-master01:~# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginxweb-6799787475-7xnwz 1/1 Running 0 36s

nginxweb-6799787475-wvgdf 0/1 ContainerCreating 0 36s

root@hep-k8s-master01:~# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginxweb-6799787475-7xnwz 1/1 Running 0 54s

nginxweb-6799787475-wvgdf 1/1 Running 0 54s

root@hep-k8s-master01:~# kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 443/TCP 30m

nginxweb-service NodePort 10.97.67.72 80:30080/TCP 3m7s

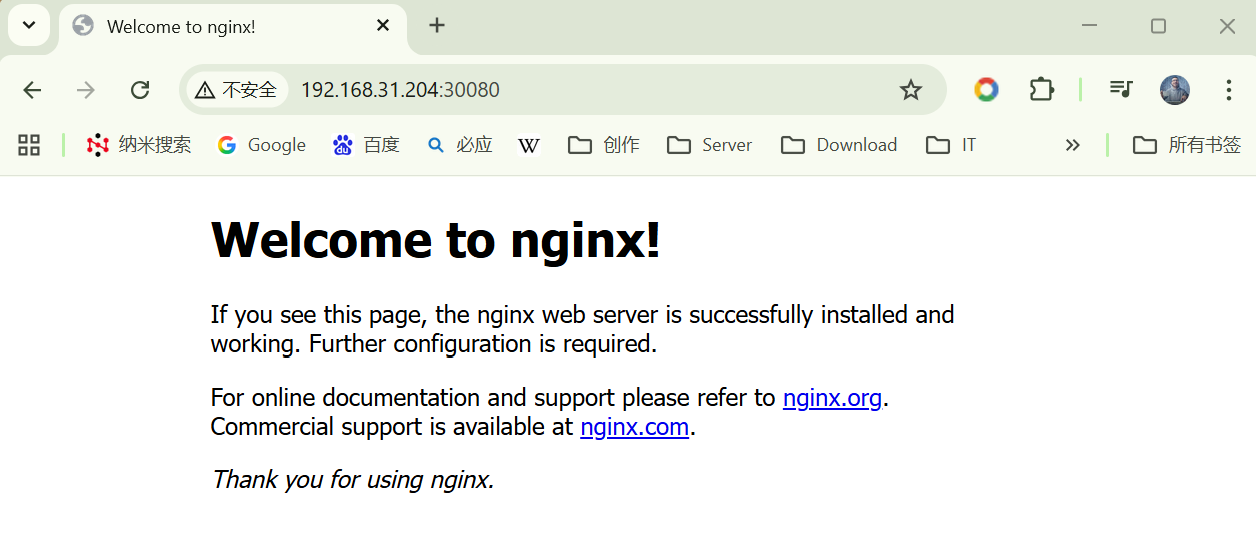

root@hep-k8s-master01:~# 6.3 验证Nginx

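# Open port 30080 on every master and worker
# (it is already covered if the 30000:32767/tcp NodePort range was opened in section 3.2)
ufw allow 30080/tcp

# From a browser on the LAN, open http://192.168.31.204:30080/ to see the Nginx welcome page
# Port 30080 on any of the three masters or four workers serves Nginx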
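Checking all seven nodes in a browser gets tedious, so the same test can be scripted. This is only a sketch: the function names `nodeport_urls` and `check_nodeport` are made up for illustration, the IP range is the one from this article, and the probe relies on curl's `-w '%{http_code}'` output, which reports 000 when a node cannot be reached.

```shell
#!/bin/sh
# Print the NodePort URL for every node IP used in this article
# (192.168.31.201 to 192.168.31.207). Function names are illustrative.
nodeport_urls() {
  port="$1"
  for last in 201 202 203 204 205 206 207; do
    echo "http://192.168.31.${last}:${port}/"
  done
}

# Probe each URL and print the HTTP status code next to it.
check_nodeport() {
  nodeport_urls "$1" | while read -r url; do
    code="$(curl -s -o /dev/null -m 3 -w '%{http_code}' "$url")"
    echo "$url -> $code"
  done
}

# List the URLs; run "check_nodeport 30080" from a machine on the same LAN
# to actually send the requests.
nodeport_urls 30080
```

A node answering with 200 on every line would confirm that the NodePort Service is reachable through all masters and workers.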

七、Ubuntu24.04配置镜像加速

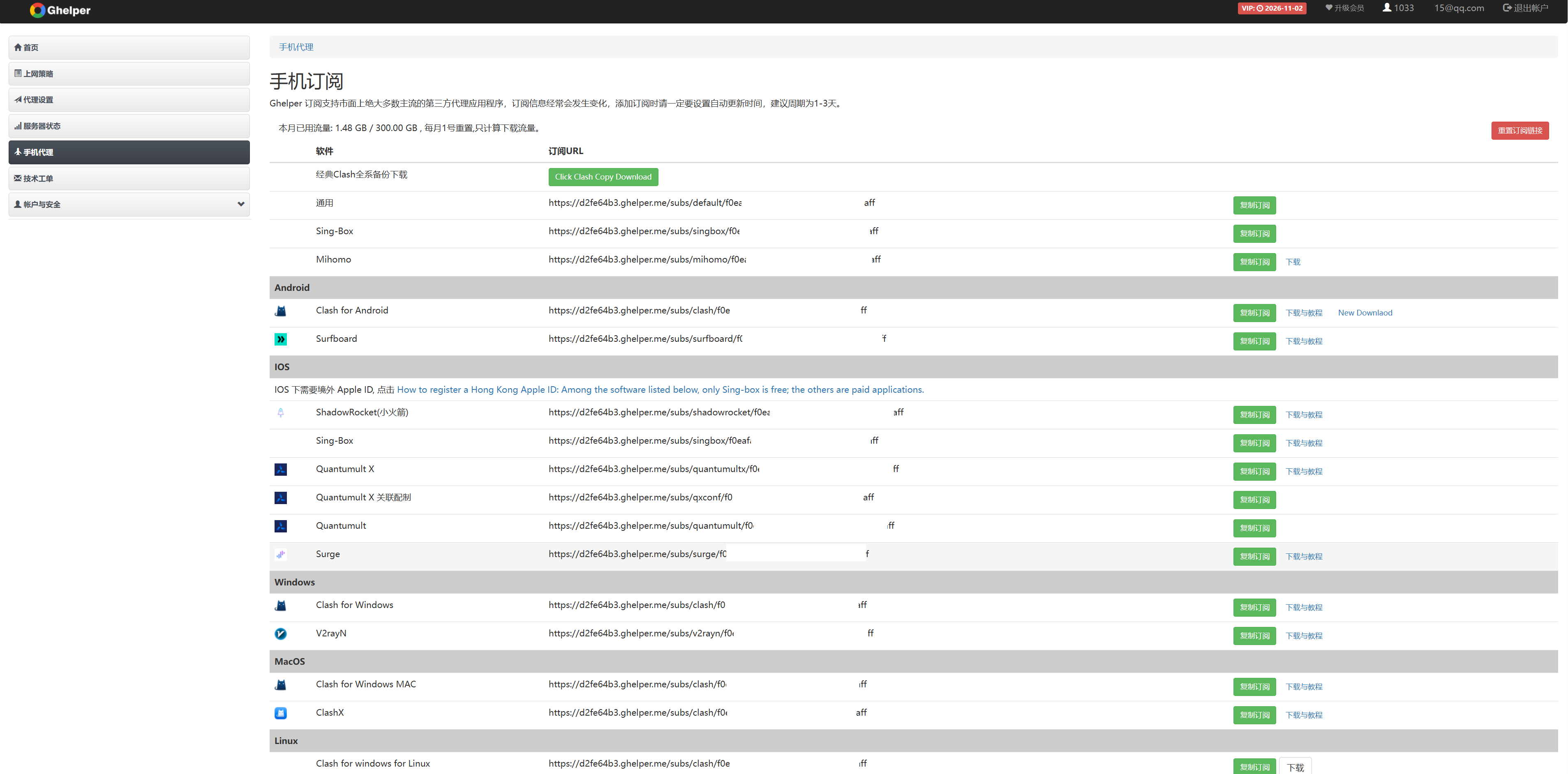

为了更好的科学上网,我这里有自己的Ghelper机场。你也可以使用自己的进行翻墙。

cd /home/kelsen

# Download the build marked -compatible
wget https://github.com/MetaCubeX/mihomo/releases/download/v1.18.9/mihomo-linux-amd64-compatible-v1.18.9.gz

# Unpack it and install it over any existing binary
gunzip -f mihomo-linux-amd64-compatible-v1.18.9.gz
chmod +x mihomo-linux-amd64-compatible-v1.18.9
mv -f mihomo-linux-amd64-compatible-v1.18.9 /usr/local/bin/mihomo

mkdir -p ~/.config/mihomo

# Replace the placeholder below with your own Mihomo subscription URL
curl -L -o ~/.config/mihomo/config.yaml "YOUR_MIHOMO_SUBSCRIPTION_URL"

cd /root/.config/mihomo
curl -L -o Country.mmdb https://github.com/P3TERX/GeoLite.mmdb/raw/download/GeoLite2-Country.mmdb
# Alternative: curl -L -o /root/.config/mihomo/Country.mmdb https://testingcf.jsdelivr.net/gh/MetaCubeX/meta-rules-dat@release/geoip.metadb

# Run it manually
/usr/local/bin/mihomo -d /root/.config/mihomo

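# Create a systemd unit for mihomo
vim /etc/systemd/system/mihomo.service

[Unit]
Description=Mihomo Daemon
After=network.target

[Service]
Type=simple
User=root
ExecStart=/usr/local/bin/mihomo -d /root/.config/mihomo
Restart=always

[Install]
WantedBy=multi-user.target

# Enable the service at boot and start it now
systemctl enable --now mihomo

# Test the proxy from the current shell (the proxy in this article listens on port 9981)
export http_proxy=http://127.0.0.1:9981
export https_proxy=http://127.0.0.1:9981
curl -I https://www.google.com

# If the service fails to start, kill any leftover mihomo processes
sudo pkill -9 mihomo

# Confirm that nothing is listening on port 9981 (no output expected)
ss -tlnp | grep 9981

# Restart the service
sudo systemctl restart mihomo

# Check the status again and make sure there is no "bind: address already in use" error
sudo systemctl status mihomo

# For debugging, mihomo can also be run in the foreground (stop the service first so the port is free)
# /usr/local/bin/mihomo -d /root/.config/mihomo

# Route apt through the proxy
vim /etc/apt/apt.conf.d/proxy.conf

Acquire::http::Proxy "http://127.0.0.1:9981";
Acquire::https::Proxy "http://127.0.0.1:9981";

apt-get update
apt-get install -y apt-transport-https ca-certificates curl gpg

# After any change to the unit file, reload systemd and restart mihomo
systemctl daemon-reload
systemctl restart mihomo
systemctl status mihomo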

# Make the proxy variables persistent across shells
vim ~/.bashrc

# Mihomo Proxy
export http_proxy="http://127.0.0.1:9981"
export https_proxy="http://127.0.0.1:9981"
# Note: NO_PROXY must be set when installing K8S, otherwise traffic inside the cluster is sent to the proxy and fails
export no_proxy="localhost,127.0.0.1,192.168.31.0/24,10.96.0.0/12,192.168.0.0/16,lb.k8s.hep.cc,.svc,.cluster.local"

source ~/.bashrc

# Because cri-dockerd is the container runtime, images are actually pulled by the Docker daemon.
# Running export http_proxy in a shell affects the kubeadm command itself but is not passed on
# to the Docker service running in the background, so the Docker service needs its own
# persistent proxy environment.
mkdir -p /etc/systemd/system/docker.service.d
vim /etc/systemd/system/docker.service.d/http-proxy.conf

[Service]
Environment="HTTP_PROXY=http://127.0.0.1:9981"
Environment="HTTPS_PROXY=http://127.0.0.1:9981"
Environment="NO_PROXY=localhost,127.0.0.1,192.168.31.0/24,lb.k8s.hep.cc,.cluster.local"

systemctl daemon-reload
systemctl restart docker

# Make sure the current shell also has the proxy variables (kubeadm needs them to fetch the image list)
export http_proxy=http://127.0.0.1:9981
export https_proxy=http://127.0.0.1:9981

# Pull the images
kubeadm config images pull --cri-socket unix:///var/run/cri-dockerd.sock

8. Graceful cluster shutdown and startup

8.1 Shutting down the cluster

# For a long outage or for maintenance, drain the nodes first. For a quick reboot this step can be skipped.
# Run on master01, once per worker node
kubectl drain hep-k8s-worker01 --ignore-daemonsets --delete-emptydir-data

# Repeat for worker02 through worker04
kubectl drain hep-k8s-worker02 --ignore-daemonsets --delete-emptydir-data
kubectl drain hep-k8s-worker03 --ignore-daemonsets --delete-emptydir-data
kubectl drain hep-k8s-worker04 --ignore-daemonsets --delete-emptydir-data
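The four drain commands, and the matching uncordon commands used at startup, differ only in the node name, so they can be generated from one list. The sketch below prints the commands instead of running them; the variable `WORKERS` and the function `worker_cmds` are names invented for illustration.

```shell
#!/bin/sh
# Worker hostnames from this article.
WORKERS="hep-k8s-worker01 hep-k8s-worker02 hep-k8s-worker03 hep-k8s-worker04"

# Print one kubectl command per worker; the action is "drain" or "uncordon".
# The function name is illustrative and not part of kubectl.
worker_cmds() {
  action="$1"
  for node in $WORKERS; do
    case "$action" in
      drain)
        echo "kubectl drain $node --ignore-daemonsets --delete-emptydir-data"
        ;;
      uncordon)
        echo "kubectl uncordon $node"
        ;;
      *)
        echo "unknown action: $action" >&2
        return 1
        ;;
    esac
  done
}

# Preview the drain commands for all workers.
worker_cmds drain
```

After checking the preview, the commands could be executed on master01 with `worker_cmds drain | sh`, and later `worker_cmds uncordon | sh` once the cluster is back up.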

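# Shut down all worker nodes
# Log in to each of the four workers (01-04) in turn and power it off
# Stop kubelet first so it does not try to restart containers during shutdown
sudo systemctl stop kubelet
sudo systemctl stop containerd
sudo shutdown -h now

# Shut down the master nodes one at a time (important)
# Master 02 and Master 03 go first
sudo systemctl stop kubelet
sudo systemctl stop containerd
sudo shutdown -h now

# Shut down Master 01 last. It holds the VIP here, so the control plane stays reachable until the very end.

8.2 Starting the cluster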

# Power on Master 01, 02, and 03 together
# Check kube-vip: it runs as a static Pod, so once the masters are up the VIP should answer ping
ping 192.168.31.200

# Check the control plane: log in to Master 01 and look at the core components and etcd
kubectl get nodes
kubectl get pods -n kube-system

# Start the worker nodes
# Once kubectl get nodes shows the masters as Ready, power on all workers, then make them schedulable again
kubectl uncordon hep-k8s-worker01
kubectl uncordon hep-k8s-worker02
kubectl uncordon hep-k8s-worker03

kubectl uncordon hep-k8s-worker04